Situation

You stumble upon a nice Large Language Model (LLM) primer and seek to persist its various definition of terms to a Knowledge Graph that manifests a Semantic Web.

How Do You Pull This Off?

In this new world driven of LLM-based Chatbots, it boils down to a natural language sentence, as per the following prompt.

Generate a glossary of terms from https://kraftful.com/blogs/llm-a-primer using JSON-LD.

Results

OpenAI’s ChatGPT with Web browsing plugin enabled

Note: produces a solution with a minor rendering error was easy to fix i.e., what follows is the edited result.

## JSON-LD Start ##

{

"@context": "https://schema.org",

"@type": "DefinedTermSet",

"name": "LLM Glossary",

"description": "Glossary of terms related to Large Language Models (LLMs)",

"hasDefinedTerm": [

{

"@type": "DefinedTerm",

"name": "Foundation models",

"description": "An LLM that serves as the basis or starting point for developing more specialized models tailored for specific tasks or industries."

},

{

"@type": "DefinedTerm",

"name": "GPT or Generative Pretrained Transformer",

"description": "A series of LLMs developed by OpenAI."

},

{

"@type": "DefinedTerm",

"name": "Neural networks",

"description": "A type of machine learning model inspired by the human brain. LLMs are a specific type of neural network known as transformer-based models."

},

{

"@type": "DefinedTerm",

"name": "Gradient descent",

"description": "The process used to train neural networks, including LLMs, by iteratively adjusting the model's weights to minimize the loss function."

},

{

"@type": "DefinedTerm",

"name": "Pre-training",

"description": "This is the initial phase of training, where the model learns to predict the next word in a sentence."

},

{

"@type": "DefinedTerm",

"name": "Fine-tuning",

"description": "This is a process where the pre-trained model is further trained (or 'tuned') on a specific task. Fine-tuning allows the model to adapt its general language understanding to a specific use cases and to align its behavior more closely with human values."

},

{

"@type": "DefinedTerm",

"name": "Alignment",

"description": "The process of ensuring that the model's output aligns with human values and goals."

},

{

"@type": "DefinedTerm",

"name": "Training Corpus",

"description": "This refers to the massive amount of text data that the model is trained on. It includes books, articles, websites, and other forms of written language."

},

{

"@type": "DefinedTerm",

"name": "Parameters",

"description": "Internal variables that the model uses to make predictions. The more parameters, the bigger the model."

},

{

"@type": "DefinedTerm",

"name": "Tokenization",

"description": "Breaking down text into words or parts of words, which the model can process."

},

{

"@type": "DefinedTerm",

"name": "Prompt engineering",

"description": "This is the process of designing the input (or 'prompt') given to an LLM to elicit a desired response."

},

{

"@type": "DefinedTerm",

"name": "Context window or sequence length",

"description": "The number of tokens that the model can take as input at once."

},

{

"@type": "DefinedTerm",

"name": "Hallucinations",

"description": "Describes instances where an LLM generates output that is not grounded in its input data. This can often lead to the generation of factually incorrect or nonsensical stuff."

},

{

"@type": "DefinedTerm",

"name": "Zero-shot learning",

"description": "This is the ability of the model to understand and perform tasks that it was not specifically trained on. It uses its general understanding of language to make inferences and predictions."

},

{

"@type": "DefinedTerm",

"name": "One or few-shot learning",

"description": "In one-shot learning, the model receives a single example, and in few-shot learning, it receives a small number of examples."

},

{

"@type": "DefinedTerm",

"name": "Agent",

"description": "In the context of LLMs, this refers to an implementation of a language model that is designed to interact with users or other systems, like a chatbot or virtual assistant."

},

{

"@type": "DefinedTerm",

"name": "Embedding",

"description": "A vector representation of words that can be used to determine how similar that text is to embeddings of other text. Words used in similar contexts have embeddings that are closer in the vector space."

},

{

"@type": "DefinedTerm",

"name": "Vector database",

"description": "A specialized type of database designed to efficiently store and manage embeddings. The key feature of a vector database is its ability to perform efficient similarity search."

},

{

"@type": "DefinedTerm",

"name": "LLMOps",

"description": "This term refers to the operational aspects of deploying and managing LLMs in production, including model training, deployment, monitoring, and troubleshooting."

},

{

"@type": "DefinedTerm",

"name": "Open-source language models",

"description": "These are models that are made available for public use and modification, so they can be deployed on-premise or in a private cloud."

},

{

"@type": "DefinedTerm",

"name": "Multimodal models",

"description": "Models that combine language with other types of data, like images or sound."

}

]

}

## JSON-LD End ##

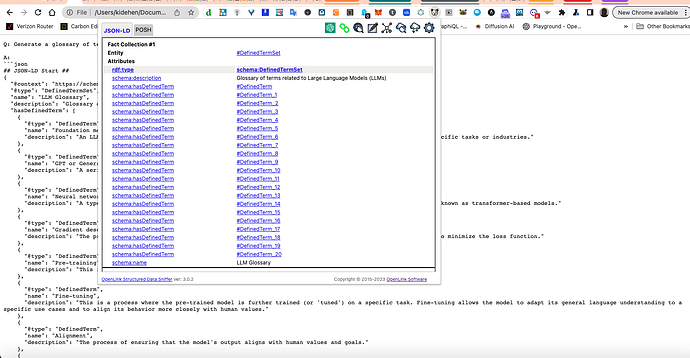

OpenLink Structured Data Sniffer Screenshot 1

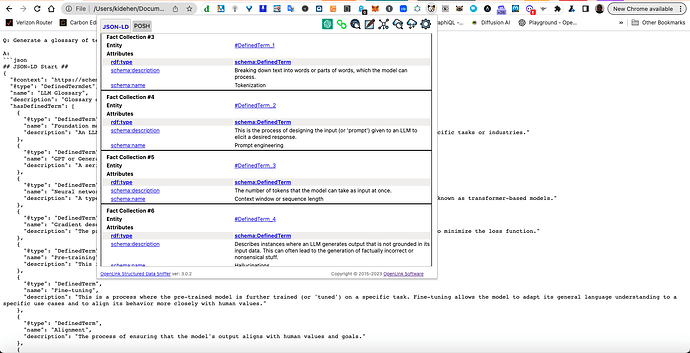

OpenLink Structured Data Sniffer Screenshot 2

Related

- How to use SQL query templates to fine-tune ChatGPT — showcasing how the unique functionality of our multi-model Virtuoso platform enables SQL-based fine-tuning of the GPT LLM

- ChatGPT and DBpedia SPARQL Query Generation from Natural Language Prompts — showcasing how to fine-tune ChatGPT using SPARQL query templates for DBpedia. This loosely-coupled approach works with any SPARQL-accessible LODCloud Knowledge Graph.

- Comparison: Google Bard Vs. Microsoft Bing + ChatGPT Vs. OpenAI ChatGPT — Google Bard wins SemanticWeb functionality precision test, compared to Bing + ChatGPT and OpenAI ChatGPT (with crawling enabled).

- Web Crawling via Bard, Bing + GPT, ChatGPT, and ChatGPT + Virtuoso Sponger — A comparative web page crawling exercise regarding functionality provided by OpenAI’s ChatGPT, Bing + GPT, and Bard.

- Using ChatGPT to Generate a Virtuoso Technical Support Agent

- Using SPARQL Query Templates to Fine-Tune ChatGPT’s Large Language Model (LLM) — with live examples of using this functionality to enhance the discovery of data across knowledge graphs associated with SPARQL Query Service endpoints.

- There’s a powerful symbiosis between ChatGPT and a Semantic Web (public or private) that is not immediately apparent. See Querying Knowledge Graphs across the Web using ChatGPT and SPARQL for a live demo-centric post about this important issue.

- Using ChatGPT to Generate Enhanced SQL and SPARQL Cheatsheets — ChatGPT is a powerful tool for productively acquiring new skills while reinforcing existing know-how. This post provides a ChatGPT transcript comprising prompts that generate SQL and SPARQL Cheat Sheets enhanced by hyperlinks.

- Using ChatGPT Web Browser Plugin to Generate RDF from a Crawled Web Page

- ChatGPT and Generating DBpedia SPARQL Queries from Natural Language Prompts

- Generating a Prompt Ontology using ChatGPT

- Generating an AI-Tutor using ChatGPT4

- Generating Instant DBpedia Lookups and Interactions using ChatGPT

- Using ChatGPT to Generate a Sequence Diagram and Knowledge Graph about the TLS Protocol

- Using ChatGPT to Generate a Sequence Diagram & Knowledge Graph about Digitally Signed Emails using S/MIME in Apple Mail