I want to import several RDF named graphs from the Quad Store into DAV files using the upload function. For fairly small graphs (up to 7k triples) everything works fine.

However, when I try to import a graph with 3 million triples, the resulting file has zero size.

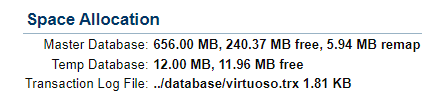

I see that the free size of the DB is small, but I expected it to be adjusted dynamically.

How can I fix this problem? Do any server parameters need to be updated?

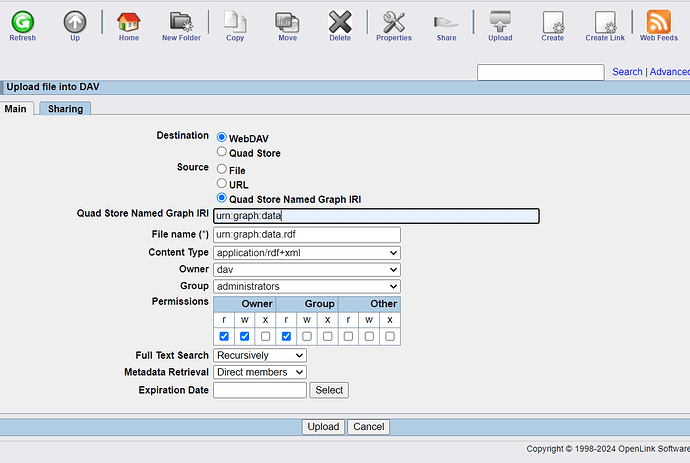

Which upload function is being used to import RDF named graphs from the Quad Store into DAV files?

The standard Upload function from the DAV browser is used.

What is the size of the individual files being uploaded? There is a 10MB limit on the size of files that can be uploaded to the Quad Store via DAV. If you want to upload large RDF files, the Virtuoso RDF Bulk Loader should be used.

Thank you for noticing the file size limit.

In my case I am uploading from the Quad Store to DAV, but I expect that some limit for uploading to DAV also exists.

The size of the largest file uploaded to DAV so far was about 70MB.

On a newly deployed version of OpenLink Virtuoso (07.20.3240) I am not able to upload a graph of 850kB from the Virtuoso Quad Store to DAV. The uploaded file in the DAV folder has zero size.

I have another deployment of the same Virtuoso version where uploads of the same graph work fine. No log message reports the error. I made the configurations of both deployments identical, but that did not solve the problem.

What can be changed in the configuration or investigated in the logs to allow successful uploads from the Quad Store to DAV?

Testing with my local Linux build (Version: 07.20.3240, Build: Jul 3 2024 (a4349d4df)), I can upload a 1MB RDF graph from the RDF Quad Store to DAV successfully.

What is the gitid of your Virtuoso open source build on both machines?

If the binary, configuration, and data are the same on both instances, there might be a problem with the database of the problem instance. I would suggest running a database integrity check with the `backup '/dev/null';` command from isql to check for any possible corruption in the database.

Do both instances contain exactly the same data in their databases?

The `trace_on()` command can also be enabled on the problem instance to write additional trace information to the log file, to see whether any errors or other information of interest are written to the log.

Hello, Hugh

Thank you for the hints.

I use the standard Virtuoso Docker image in Docker and K8s, without any custom builds.

The integrity check completed without any errors.

After activating the trace option I was able to detect the following error message:

2024-11-22 09:20:09 09:20:09 COMP_2 dba 172.17.0.1 Internal Compile text: sparql define output:format 'RDF/XML' construct { ?s ?p ?o } where { graph <urn:graph:onto> { ?s ?p ?o } }

2024-11-22 09:20:09 09:20:09 ERRS_0 42000 D1CTX Hash dictionary is full, exceeded 10000 entries

Could it be relevant to the problem described, and how could it be fixed?
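For anyone scanning a longer trace log for entries like the one above, here is a minimal Python sketch. It assumes the line layout seen in the two lines quoted in this post (date, two timestamps, an `ERRS_n` tag, SQL state, error code, message); other Virtuoso log lines may well look different. The names `TraceError` and `find_trace_errors` are made up for this illustration.

```python
import re
from typing import Iterable, NamedTuple


class TraceError(NamedTuple):
    timestamp: str
    sql_state: str
    error_code: str
    message: str


# Layout assumed from the quoted log lines only:
# "<date> <time> <time> ERRS_<n> <sql state> <error code> <message>"
_ERR_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) \d{2}:\d{2}:\d{2} "
    r"ERRS_\d+ (?P<state>\S+) (?P<code>\S+) (?P<msg>.*)$"
)


def find_trace_errors(lines: Iterable[str]) -> list[TraceError]:
    """Return the ERRS_* entries found in an iterable of log lines."""
    errors = []
    for line in lines:
        match = _ERR_LINE.match(line.strip())
        if match:
            errors.append(
                TraceError(match["ts"], match["state"], match["code"], match["msg"])
            )
    return errors


# The two lines quoted in the post above.
sample = [
    "2024-11-22 09:20:09 09:20:09 COMP_2 dba 172.17.0.1 Internal Compile text: "
    "sparql define output:format 'RDF/XML' construct { ?s ?p ?o } "
    "where { graph <urn:graph:onto> { ?s ?p ?o } }",
    "2024-11-22 09:20:09 09:20:09 ERRS_0 42000 D1CTX "
    "Hash dictionary is full, exceeded 10000 entries",
]

for err in find_trace_errors(sample):
    print(f"{err.timestamp} [{err.sql_state}/{err.error_code}] {err.message}")
```

To run it against a real log, pass an open file object instead of `sample`; the log file path depends on the deployment.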

…

Finally, the problem was fixed by following the Stack Overflow post "Virtuoso 42000 Error D1CTX after using curl to retrieve RDF data from dbpedia.org".

However, to apply the new settings we had to restart the instance. It seems that simply changing SPARQL parameters in the web interface has no effect, because we had increased the value of that parameter before but had not restarted the Virtuoso server.

Could you please clarify whether we should always restart the Virtuoso container for parameter changes to take effect?

The post "Virtuoso configuration file parameters to limit the number of triples in SPARQL query" also details how to get around the ERRS_0 42000 D1CTX Hash dictionary is full, exceeded 10000 entries error.

When a change is made to the virtuoso.ini configuration file, the database does need to be restarted for the setting to take effect.

In my particular case I changed the SPARQL parameters using the web interface. I could see that the new values appeared in the .ini file, but they did not take effect until the Docker container was restarted. For the K8s case I can also confirm that the pod has to be restarted to apply new values of SPARQL parameters.
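To check what is actually written to the .ini file before restarting, a small Python sketch like the one below may help. It only reads the file contents; it says nothing about the values the running server is using, which per the above stay unchanged until a restart. The parameter names and values in the sample (`ResultSetMaxRows`, `MaxConstructTriples`) are illustrative assumptions, not taken from this thread, so check the linked posts for the parameter that applies. `sparql_settings` is a made-up helper name.

```python
import configparser


def sparql_settings(ini_text: str) -> dict[str, str]:
    """Return the key/value pairs of the [SPARQL] section of an ini text."""
    parser = configparser.ConfigParser(
        interpolation=None,              # treat '%' in values literally
        strict=False,                    # tolerate repeated keys or sections
        inline_comment_prefixes=(";",),  # assumed: ';' starts a trailing comment
    )
    parser.optionxform = str  # keep the original case of parameter names
    parser.read_string(ini_text)
    if not parser.has_section("SPARQL"):
        return {}
    return dict(parser["SPARQL"])


# Illustrative fragment only; names and values here are assumptions.
sample_ini = """
[Parameters]
ServerPort = 1111

[SPARQL]
ResultSetMaxRows = 10000
MaxConstructTriples = 10000
"""

for name, value in sparql_settings(sample_ini).items():
    print(f"{name} = {value}")
```

For a real deployment, read the text from the virtuoso.ini file used by the container or pod and pass it to `sparql_settings`.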